Table of Contents

- 1. Creating a Maven project: IDEA reports `java: error: release version 5 not supported`

- 2. Spring Boot fails to start: `class file has wrong version 61.0, should be 52.0`

1. Creating a Maven project: IDEA reports `java: error: release version 5 not supported`

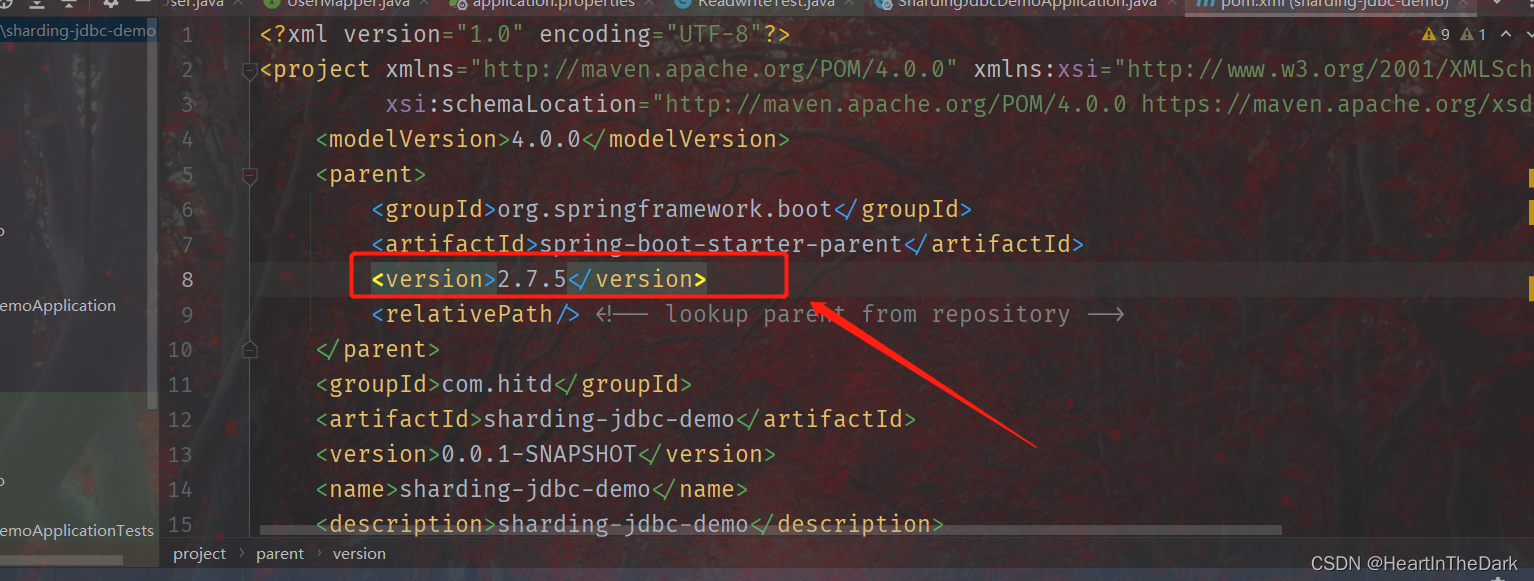

Set the default JDK version in `conf\settings.xml` under your Maven installation directory…

Add the code shown in the image to …:

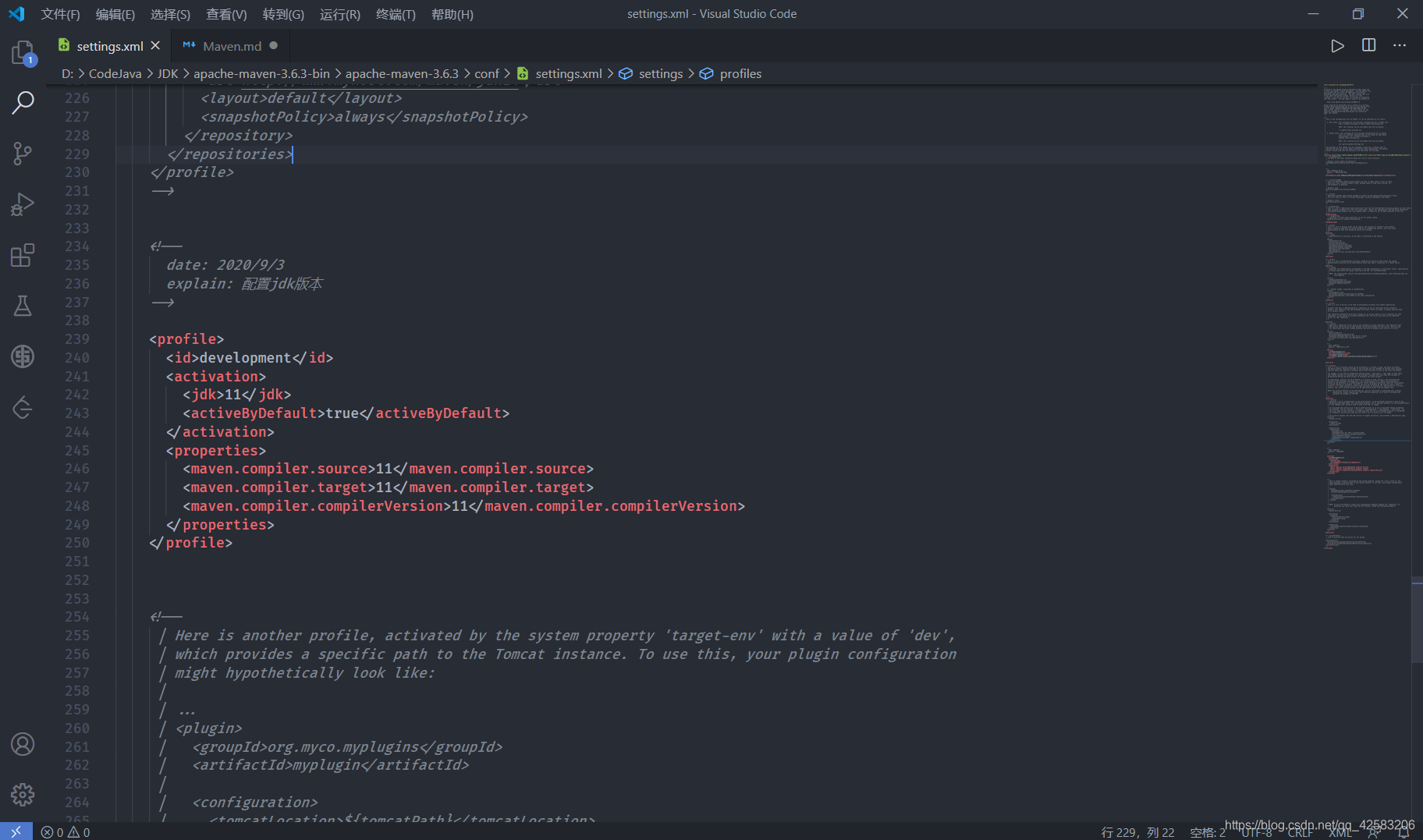
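The image is not reproduced as text, so the following is only a sketch of a commonly used `settings.xml` profile of this kind, assuming JDK 1.8 is installed; the profile id `jdk-1.8` is a hypothetical name and the code in the image may differ. The error typically appears because, without an explicit setting, older versions of maven-compiler-plugin compile at the Java 5 level, which recent JDKs no longer accept. The profile sets the `maven.compiler.source` and `maven.compiler.target` properties that the compiler plugin reads.

```xml
<!-- Sketch: a profile placed inside the <profiles> element of settings.xml (assumes JDK 1.8) -->
<profile>
  <!-- Hypothetical profile id; any unique name works -->
  <id>jdk-1.8</id>
  <activation>
    <!-- Turn this profile on by default -->
    <activeByDefault>true</activeByDefault>
  </activation>
  <properties>
    <!-- Read by maven-compiler-plugin as the source/target language level -->
    <maven.compiler.source>1.8</maven.compiler.source>
    <maven.compiler.target>1.8</maven.compiler.target>
  </properties>
</profile>
```

Replace `1.8` with the JDK version your projects actually use.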

2. Spring Boot fails to start: `class file has wrong version 61.0, should be 52.0`

Cause

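The project uses Spring Boot 3.0 or later. Spring has announced that, starting with Spring Framework 6 and Spring Boot 3.0, the minimum supported JDK is 17. Class file version 61.0 corresponds to Java 17 and 52.0 to Java 8, so the error means classes compiled for Java 17 are being used with JDK 8. Downgrading Spring Boot to a version below 3.0 is therefore enough.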

Downgrade Spring Boot to any version below 3.0, then reload the Maven project and restart the application.
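As a sketch, assuming the project inherits from `spring-boot-starter-parent` and runs on JDK 8, the downgrade in `pom.xml` could look like this. The version `2.7.18` is only one example of a 2.x release; `java.version` is the property the 2.x starter parent uses to set the compiler level.

```xml
<!-- pom.xml: inherit from a Spring Boot 2.x parent instead of 3.x -->
<parent>
  <groupId>org.springframework.boot</groupId>
  <artifactId>spring-boot-starter-parent</artifactId>
  <!-- Example version below 3.0; Spring Boot 2.x still supports Java 8 -->
  <version>2.7.18</version>
  <relativePath/> <!-- look up the parent from the repository -->
</parent>

<properties>
  <!-- Java level the 2.x starter parent passes to the compiler -->
  <java.version>1.8</java.version>
</properties>
```

If switching the project to JDK 17 is an option, keeping Spring Boot 3.x on JDK 17 would also resolve the error.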