
Contents

- Environment

- Traffic analysis

- Pod to Pod

- Node to Pod

- Pod to service

- Node to service

- NetworkPolicy

Tracing and Observing Network Traffic

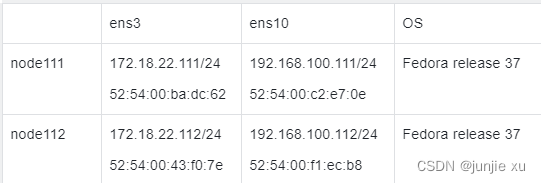

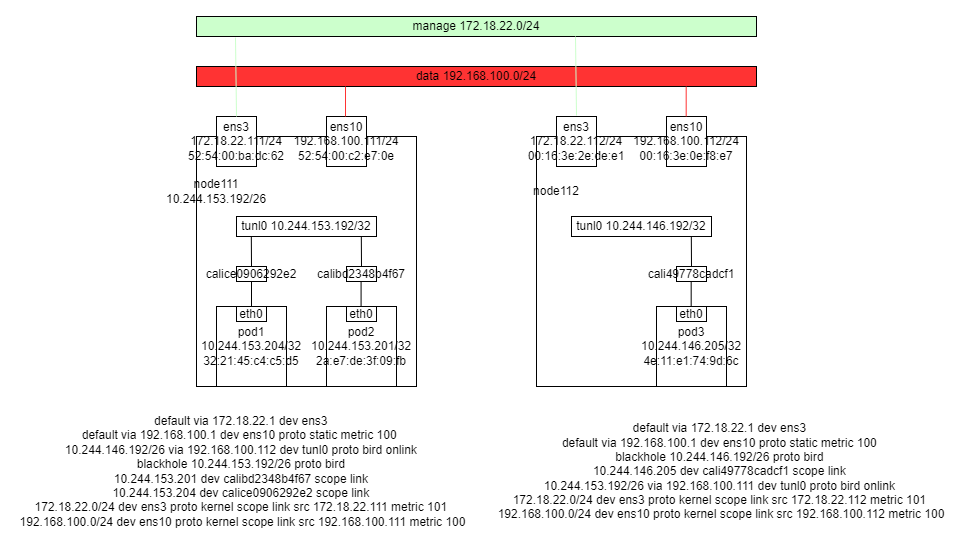

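Environment

On the host, each pod IP has its own route pointing to that pod's veth device.

The route to the peer node's pod subnet points to tunl0, and its next hop is the peer node's ens10 IP.

The local node's pod subnet, whose first IP is assigned to tunl0, has a blackhole route, so traffic to an address in that subnet with no more specific route is dropped.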
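The three kinds of routes above behave like a longest-prefix-match table. The following is a minimal Python sketch of that lookup, not the kernel's or Calico's implementation. The per-pod and tunl0 routes are the ones quoted in the captures in this article; the blackhole prefix 10.244.153.192/26 is an assumption inferred from the tunl0 address and the /26 size of the peer node's block.

```python
import ipaddress

# (prefix, action) pairs. The blackhole prefix is an assumption (see lead-in);
# the other three routes are quoted in this article.
ROUTES = [
    ("10.244.153.204/32", "dev calice0906292e2"),
    ("10.244.153.201/32", "dev cali118af4ccd16"),
    ("10.244.146.192/26", "via 192.168.100.112 dev tunl0"),
    ("10.244.153.192/26", "blackhole"),
]


def lookup(dst: str) -> str:
    """Return the action of the most specific route containing dst."""
    addr = ipaddress.ip_address(dst)
    best = None
    for prefix, action in ROUTES:
        net = ipaddress.ip_network(prefix)
        if addr in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, action)
    return best[1] if best else "no route"


for dst in ("10.244.153.201", "10.244.146.205", "10.244.153.250"):
    print(dst, "->", lookup(dst))
```

A local pod IP hits its /32 veth route, a pod IP on the peer node hits the tunl0 route, and an unassigned address in the local block falls through to the blackhole.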

Traffic analysis

Pod to Pod

- Between different pods on the same node

pod1 <-> pod2

1. Capture on eth0 inside pod1:

00:37:59.442570 32:21:45:c4:c5:d5 > ee:ee:ee:ee:ee:ee, ethertype IPv4 (0x0800), length 98: 10.244.153.204 > 10.244.153.201: ICMP echo request, id 56616, seq 1, length 64

00:37:59.442707 ee:ee:ee:ee:ee:ee > 32:21:45:c4:c5:d5, ethertype IPv4 (0x0800), length 98: 10.244.153.201 > 10.244.153.204: ICMP echo reply, id 56616, seq 1, length 64

Inside pod1 the next hop is always 169.254.1.1, and the destination MAC is ee:ee:ee:ee:ee:ee.

2. Capture on the host-side veth calice0906292e2: the packets are unchanged.

3. The packet matches the host route 10.244.153.201 dev cali118af4ccd16 scope link and the neighbor entry 10.244.153.201 dev calibd2348b4f67 lladdr f2:d4:17:63:9d:3d REACHABLE. Capture on cali118af4ccd16:

00:41:07.879975 ee:ee:ee:ee:ee:ee > f2:d4:17:63:9d:3d, ethertype IPv4 (0x0800), length 98: 10.244.153.204 > 10.244.153.201: ICMP echo request, id 56616, seq 185, length 64

00:41:07.879998 f2:d4:17:63:9d:3d > ee:ee:ee:ee:ee:ee, ethertype IPv4 (0x0800), length 98: 10.244.153.201 > 10.244.153.204: ICMP echo reply, id 56616, seq 185, length 64

4. Capture on eth0 inside pod2:

00:43:59.911019 ee:ee:ee:ee:ee:ee > f2:d4:17:63:9d:3d, ethertype IPv4 (0x0800), length 98: 10.244.153.204 > 10.244.153.201: ICMP echo request, id 56616, seq 353, length 64

00:43:59.911056 f2:d4:17:63:9d:3d > ee:ee:ee:ee:ee:ee, ethertype IPv4 (0x0800), length 98: 10.244.153.201 > 10.244.153.204: ICMP echo reply, id 56616, seq 353, length 64
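To compare hops without reading the MAC addresses by eye, the capture lines can be parsed and diffed. The sketch below is a small Python helper written for this article's `tcpdump -e` ICMP output only; the regular expression and the names `parse`, `POD1_ETH0` and `POD2_ETH0` are illustrative, and the two sample lines are copied from the captures above.

```python
import re

# Matches the `tcpdump -e` ICMP lines shown in this article (IPv4 only).
LINE = re.compile(
    r"^(?P<ts>\S+) (?P<smac>[0-9a-f:]{17}) > (?P<dmac>[0-9a-f:]{17}), "
    r"ethertype IPv4 \(0x0800\), length (?P<length>\d+): "
    r"(?P<src>[\d.]+) > (?P<dst>[\d.]+): (?P<info>.*)$"
)


def parse(line: str) -> dict:
    """Split one capture line into timestamp, MACs, IPs and the remaining text."""
    m = LINE.match(line.strip())
    if m is None:
        raise ValueError(f"unrecognized capture line: {line!r}")
    return m.groupdict()


POD1_ETH0 = ("00:37:59.442570 32:21:45:c4:c5:d5 > ee:ee:ee:ee:ee:ee, "
             "ethertype IPv4 (0x0800), length 98: 10.244.153.204 > 10.244.153.201: "
             "ICMP echo request, id 56616, seq 1, length 64")
POD2_ETH0 = ("00:43:59.911019 ee:ee:ee:ee:ee:ee > f2:d4:17:63:9d:3d, "
             "ethertype IPv4 (0x0800), length 98: 10.244.153.204 > 10.244.153.201: "
             "ICMP echo request, id 56616, seq 353, length 64")

sent, received = parse(POD1_ETH0), parse(POD2_ETH0)
same_ips = (sent["src"], sent["dst"]) == (received["src"], received["dst"])
print("IP pair unchanged:", same_ips)
print("MACs on pod1 side:", sent["smac"], "->", sent["dmac"])
print("MACs on pod2 side:", received["smac"], "->", received["dmac"])
```

The IP pair stays the same while both MAC addresses are rewritten, which is what routed (layer 3) forwarding through the host looks like, as opposed to bridging.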

- Between pods on different nodes

pod1 accesses pod3

Capture on the host end of the veth pair:

20:22:47.858674 32:21:45:c4:c5:d5 > ee:ee:ee:ee:ee:ee, ethertype IPv4 (0x0800), length 98: 10.244.153.204 > 10.244.146.205: ICMP echo request, id 17555, seq 106, length 64

20:22:47.860043 ee:ee:ee:ee:ee:ee > 32:21:45:c4:c5:d5, ethertype IPv4 (0x0800), length 98: 10.244.146.205 > 10.244.153.204: ICMP echo reply, id 17555, seq 106, length 64

The host route 10.244.146.192/26 via 192.168.100.112 dev tunl0 proto bird onlink sends the packet out through tunl0.

Capture on tunl0: there is no layer-2 information.

20:24:42.016898 ip: 10.244.153.204 > 10.244.146.205: ICMP echo request, id 17555, seq 220, length 64

20:24:42.022282 ip: 10.244.146.205 > 10.244.153.204: ICMP echo reply, id 17555, seq 220, length 64

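Capture on the data NIC (ens10): the MAC addresses are those of the two nodes' NICs, the outer IP addresses are the two nodes' NIC IPs, and the inner packet is the original ICMP packet.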

20:25:41.151109 52:54:00:dc:c7:b4 > 52:54:00:d3:bf:21, ethertype IPv4 (0x0800), length 118: 192.168.100.111 > 192.168.100.112: 10.244.153.204 > 10.244.146.205: ICMP echo request, id 17555, seq 279, length 64

20:25:41.152198 52:54:00:d3:bf:21 > 52:54:00:dc:c7:b4, ethertype IPv4 (0x0800), length 118: 192.168.100.112 > 192.168.100.111: 10.244.146.205 > 10.244.153.204: ICMP echo reply, id 17555, seq 279, length 64

On the peer node the packet path is symmetric.
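The frame lengths in the captures (98 bytes on the veth, 118 bytes on ens10) are consistent with one extra IPv4 header added by IP-in-IP. The following Python sketch works through that arithmetic. The header sizes assume no IP or TCP options, and the 1500-byte NIC MTU is an assumption that is not shown anywhere in this article.

```python
ETH_HEADER = 14    # Ethernet header without VLAN tag
IPV4_HEADER = 20   # IPv4 header without options
TCP_HEADER = 20    # TCP header without options
ICMP_MESSAGE = 64  # "length 64" in the captures: ICMP header plus data

# Frame seen on the veth pair: Ethernet + IPv4 + ICMP.
plain_frame = ETH_HEADER + IPV4_HEADER + ICMP_MESSAGE

# Frame seen on ens10: the same packet wrapped in one more IPv4 header.
ipip_frame = plain_frame + IPV4_HEADER

# Assumption: the physical NIC MTU is 1500 bytes.
nic_mtu = 1500
tunnel_mtu = nic_mtu - IPV4_HEADER              # room left inside the tunnel
mss = tunnel_mtu - IPV4_HEADER - TCP_HEADER     # largest TCP payload per segment

print(plain_frame, ipip_frame, tunnel_mtu, mss)
```

Under that MTU assumption the result also agrees with the mss 1440 advertised by the pods in the TCP captures in the service sections.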

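Node to Pod

- Node to a pod on the same node

Ping pod1 from node111

Capture on the host end of the veth pair. The packet follows the route 10.244.153.204 dev calice0906292e2 scope link, and its source address is the IP of the NIC that carries the default route.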

20:15:23.174963 ee:ee:ee:ee:ee:ee > 32:21:45:c4:c5:d5, ethertype IPv4 (0x0800), length 98: 172.18.22.111 > 10.244.153.204: ICMP echo request, id 6, seq 9, length 64

20:15:23.175025 32:21:45:c4:c5:d5 > ee:ee:ee:ee:ee:ee, ethertype IPv4 (0x0800), length 98: 10.244.153.204 > 172.18.22.111: ICMP echo reply, id 6, seq 9, length 64

Capture on eth0 inside the pod:

20:18:23.399015 ee:ee:ee:ee:ee:ee > 32:21:45:c4:c5:d5, ethertype IPv4 (0x0800), length 98: 172.18.22.111 > 10.244.153.204: ICMP echo request, id 6, seq 185, length 64

20:18:23.399049 32:21:45:c4:c5:d5 > ee:ee:ee:ee:ee:ee, ethertype IPv4 (0x0800), length 98: 10.244.153.204 > 172.18.22.111: ICMP echo reply, id 6, seq 185, length 64

- Node to a pod on another node

node111 to pod3

Capture on tunl0 of node111. The packet follows the route 10.244.146.192/26 via 192.168.100.112 dev tunl0 proto bird onlink, so the source IP is the tunl0 IP.

21:10:02.099557 ip: 10.244.153.192 > 10.244.146.205: ICMP echo request, id 11, seq 46, length 64

21:10:02.100595 ip: 10.244.146.205 > 10.244.153.192: ICMP echo reply, id 11, seq 46, length 64

Capture on ens10:

21:10:18.124555 52:54:00:dc:c7:b4 > 52:54:00:d3:bf:21, ethertype IPv4 (0x0800), length 118: 192.168.100.111 > 192.168.100.112: 10.244.153.192 > 10.244.146.205: ICMP echo request, id 11, seq 62, length 64

21:10:18.129910 52:54:00:d3:bf:21 > 52:54:00:dc:c7:b4, ethertype IPv4 (0x0800), length 118: 192.168.100.112 > 192.168.100.111: 10.244.146.205 > 10.244.153.192: ICMP echo reply, id 11, seq 62, length 64

Pod to service

# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

nginx-service ClusterIP 10.107.161.255 <none> 8080/TCP 2s

# kubectl get endpoints

NAME ENDPOINTS AGE

nginx-service 10.244.146.205:80,10.244.153.201:80 5s

- Pod accesses the service ClusterIP

Capture on pod1's veth pair: the destination address is the service IP.

21:27:42.690221 32:21:45:c4:c5:d5 > ee:ee:ee:ee:ee:ee, ethertype IPv4 (0x0800), length 74: 10.244.153.204.38610 > 10.107.161.255.8080: Flags [S], seq 4133783852, win 64800, options [mss 1440,sackOK,TS val 3911662959 ecr 0,nop,wscale 7], length 0

21:27:42.690427 ee:ee:ee:ee:ee:ee > 32:21:45:c4:c5:d5, ethertype IPv4 (0x0800), length 74: 10.107.161.255.8080 > 10.244.153.204.38610: Flags [S.], seq 86025828, ack 4133783853, win 64260, options [mss 1440,sackOK,TS val 1534565294 ecr 3911662959,nop,wscale 7], length 0

Capture on pod2's veth pair: the source address is still pod1's IP, the destination address is pod2's IP, and the destination port is 80.

21:27:42.690366 ee:ee:ee:ee:ee:ee > 2a:e7:de:3f:09:fb, ethertype IPv4 (0x0800), length 74: 10.244.153.204.38610 > 10.244.153.201.80: Flags [S], seq 4133783852, win 64800, options [mss 1440,sackOK,TS val 3911662959 ecr 0,nop,wscale 7], length 0

21:27:42.690404 2a:e7:de:3f:09:fb > ee:ee:ee:ee:ee:ee, ethertype IPv4 (0x0800), length 74: 10.244.153.201.80 > 10.244.153.204.38610: Flags [S.], seq 86025828, ack 4133783853, win 64260, options [mss 1440,sackOK,TS val 1534565294 ecr 3911662959,nop,wscale 7], length 0

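On the way to the service, the destination is DNATed to a backend IP and the packet is forwarded to pod2. pod2 replies to pod1, and the source of the reply is rewritten back to the service IP, which is the reverse of the DNAT.

When the backend is pod3 on another node, the flow is the same as above.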
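The DNAT on the request and the reverse translation on the reply can be modeled with a small connection-tracking table. The sketch below is a simplified Python model, not how kube-proxy or netfilter is implemented. The addresses and ports come from the captures above; the `Nat` class and the `pick` parameter, which stands in for the real backend selection, are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    src: str
    sport: int
    dst: str
    dport: int


# ClusterIP and endpoints as listed by kubectl above.
SERVICES = {
    ("10.107.161.255", 8080): [("10.244.146.205", 80), ("10.244.153.201", 80)],
}


class Nat:
    """Toy model: DNAT on the request, reverse translation on the reply."""

    def __init__(self):
        # Reply tuple as sent by the backend -> original (service IP, port).
        self.conntrack = {}

    def outbound(self, flow: Flow, pick: int = 0) -> Flow:
        backends = SERVICES.get((flow.dst, flow.dport))
        if not backends:
            return flow  # not a service address, leave untouched
        ip, port = backends[pick]  # hypothetical stand-in for backend selection
        self.conntrack[(ip, port, flow.src, flow.sport)] = (flow.dst, flow.dport)
        return Flow(flow.src, flow.sport, ip, port)

    def reply(self, flow: Flow) -> Flow:
        orig = self.conntrack.get((flow.src, flow.sport, flow.dst, flow.dport))
        if orig is None:
            return flow  # no tracked connection, leave untouched
        return Flow(orig[0], orig[1], flow.dst, flow.dport)


nat = Nat()
request = nat.outbound(Flow("10.244.153.204", 38610, "10.107.161.255", 8080), pick=1)
answer = nat.reply(Flow("10.244.153.201", 80, "10.244.153.204", 38610))
print(request)
print(answer)
```

The translated request matches what was captured on pod2's veth, and the translated reply matches what pod1 sees coming back from the service IP.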

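Node to service

- Node accesses the service ClusterIP

When the backend pod is on the same node

The destination is DNATed to pod2's IP, and the packet is built with the IP of the default-route NIC as its source.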

22:07:10.054483 ee:ee:ee:ee:ee:ee > 2a:e7:de:3f:09:fb, ethertype IPv4 (0x0800), length 74: 172.18.22.111.13579 > 10.244.153.201.80: Flags [S], seq 948451555, win 65495, options [mss 65495,sackOK,TS val 519032124 ecr 0,nop,wscale 7], length 0

22:07:10.054534 2a:e7:de:3f:09:fb > ee:ee:ee:ee:ee:ee, ethertype IPv4 (0x0800), length 74: 10.244.153.201.80 > 172.18.22.111.13579: Flags [S.], seq 2350447822, ack 948451556, win 64260, options [mss 1440,sackOK,TS val 319012716 ecr 519032124,nop,wscale 7], length 0

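When the backend pod is on another node

The destination is DNATed to pod3's IP. Following the route, the request uses node111's tunl0 address, the first IP of its IP pool block, as the source.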

22:07:19.881187 ee:ee:ee:ee:ee:ee > 4e:11:e1:74:9d:6c, ethertype IPv4 (0x0800), length 74: 10.244.153.192.14543 > 10.244.146.205.http: Flags [S], seq 1990644142, win 65495, options [mss 65495,sackOK,TS val 519041957 ecr 0,nop,wscale 7], length 0

22:07:19.881227 4e:11:e1:74:9d:6c > ee:ee:ee:ee:ee:ee, ethertype IPv4 (0x0800), length 74: 10.244.146.205.http > 10.244.153.192.14543: Flags [S.], seq 2030705031, ack 1990644143, win 64260, options [mss 1440,sackOK,TS val 1033275778 ecr 519041957,nop,wscale 7], length 0

External client to service

default nginx-service NodePort 10.107.161.255 8080:30080/TCP

Capture on the host NIC that holds the accessed IP:

22:13:00.656471 ac:7e:8a:6c:41:c4 > 52:54:00:ba:dc:62, ethertype IPv4 (0x0800), length 149: 172.20.151.77.47334 > 172.18.22.111.30080: Flags [P.], seq 1:84, ack 1, win 229, options [nop,nop,TS val 430411787 ecr 1033616455], length 83

22:13:00.657729 52:54:00:ba:dc:62 > ac:7e:8a:6c:41:c4, ethertype IPv4 (0x0800), length 66: 172.18.22.111.30080 > 172.20.151.77.47334: Flags [.], ack 84, win 502, options [nop,nop,TS val 1033616544 ecr 430411787], length

Chain KUBE-NODE-PORT (1 references)

target prot opt source destination

KUBE-MARK-MASQ tcp -- 0.0.0.0/0 0.0.0.0/0 /* Kubernetes nodeport TCP port for masquerade purpose */ match-set KUBE-NODE-PORT-TCP dst

After MASQUERADE the packet becomes:

22:15:32.507099 ee:ee:ee:ee:ee:ee > 2a:e7:de:3f:09:fb, ethertype IPv4 (0x0800), length 74: 172.18.22.111.10229 > 10.244.153.201.80: Flags [S], seq 450784444, win 29200, options [mss 1460,sackOK,TS val 430563700 ecr 0,nop,wscale 7], length 0

22:15:32.507198 2a:e7:de:3f:09:fb > ee:ee:ee:ee:ee:ee, ethertype IPv4 (0x0800), length 74: 10.244.153.201.80 > 172.18.22.111.10229: Flags [S.], seq 3057963543, ack 450784445, win 64260, options [mss 1440,sackOK,TS val 319515169 ecr 430563700,nop,wscale 7], length 0

If the backend is not on this node

After MASQUERADE the packet becomes:

22:31:15.850370 ee:ee:ee:ee:ee:ee > 4e:11:e1:74:9d:6c, ethertype IPv4 (0x0800), length 74: 10.244.153.192.50499 > 10.244.146.205.http: Flags [S], seq 1374007914, win 29200, options [mss 1460,sackOK,TS val 431507052 ecr 0,nop,wscale 7], length 0

22:31:15.850422 4e:11:e1:74:9d:6c > ee:ee:ee:ee:ee:ee, ethertype IPv4 (0x0800), length 74: 10.244.146.205.http > 10.244.153.192.50499: Flags [S.], seq 3861438831, ack 1374007915, win 64260, options [mss 1440,sackOK,TS val 1034711747 ecr 431507052,nop,wscale 7], length 0
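In both the node-to-service and the NodePort captures, the source address the backend sees depends on which device the packet leaves through. The Python sketch below only summarizes what the captures show; it does not reproduce the kernel's source address selection. The /26 local block is an assumption inferred from the tunl0 address, and `masquerade_source` is a hypothetical helper name.

```python
import ipaddress

# Assumption: node111's pod block, inferred from tunl0 = 10.244.153.192.
LOCAL_BLOCK = ipaddress.ip_network("10.244.153.192/26")
NODE_IP = "172.18.22.111"    # IP of the default-route NIC on node111 (from the captures)
TUNL0_IP = "10.244.153.192"  # tunl0 address on node111 (from the captures)


def masquerade_source(backend_ip: str) -> str:
    """Source address the backend sees for traffic NATed on node111."""
    if ipaddress.ip_address(backend_ip) in LOCAL_BLOCK:
        return NODE_IP   # leaves through a cali* veth on this node
    return TUNL0_IP      # leaves through tunl0 toward another node


print(masquerade_source("10.244.153.201"))  # pod2, same node
print(masquerade_source("10.244.146.205"))  # pod3, other node
```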

NetworkPolicy

Label pod1 with role=pod1.

Label pod2 and pod3 with app=nginx.

apiVersion: projectcalico.org/v3
kind: NetworkPolicy
metadata:
  name: allow-tcp-80
  namespace: default
spec:
  selector: app == 'nginx'
  ingress:
  - action: Allow
    protocol: TCP
    source:
      selector: role == 'pod1'
    destination:
      ports:
      - 80
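The effect of this policy on a single connection can be sketched as a small decision function. This is a simplified Python model rather than Calico's evaluation engine: it handles only this one policy and equality selectors. It assumes that a pod not selected by any policy is allowed, and that a selected pod drops ingress traffic that matches no rule. The `allowed` function and its parameters are hypothetical.

```python
# The policy above, reduced to the fields this sketch needs.
POLICY = {
    "selector": ("app", "nginx"),
    "ingress": [
        {"action": "Allow", "protocol": "TCP", "source": ("role", "pod1"), "ports": [80]},
    ],
}


def allowed(src_labels: dict, dst_labels: dict, protocol: str, dport=None) -> bool:
    """Decide whether ingress traffic to the destination pod is allowed."""
    key, value = POLICY["selector"]
    if dst_labels.get(key) != value:
        return True  # assumption: no policy selects this pod, so traffic passes
    for rule in POLICY["ingress"]:
        src_key, src_value = rule["source"]
        if (rule["protocol"] == protocol
                and src_labels.get(src_key) == src_value
                and dport in rule["ports"]):
            return rule["action"] == "Allow"
    return False  # selected by the policy but no rule matched


pod1 = {"role": "pod1"}
nginx = {"app": "nginx"}
print(allowed(pod1, nginx, "TCP", 80))   # pod1 to nginx on port 80
print(allowed(pod1, nginx, "ICMP"))      # ping from pod1 to nginx
print(allowed({}, nginx, "TCP", 80))     # unlabeled client to nginx
```

In this model pod1 can still reach port 80 on pod2 and pod3, while ICMP from pod1 and TCP from unlabeled clients no longer match any rule.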

After applying the policy, inspect the iptables flow:

# iptables -nL

Chain OUTPUT (policy ACCEPT)

target prot opt source destination

cali-OUTPUT all -- 0.0.0.0/0 0.0.0.0/0 /* cali:tVnHkvAo15HuiPy0 */

// The policy is an ingress policy, so on the host it shows up under the OUTPUT chain, as rules for traffic sent to the pod

Chain cali-OUTPUT (1 references)

target prot opt source destination

cali-forward-endpoint-mark all -- 0.0.0.0/0 0.0.0.0/0 [goto] /* cali:5Z67OUUpTOM7Xa1a */ mark match ! 0x0/0xfff00000

Chain cali-forward-endpoint-mark (1 references)

target prot opt source destination

cali-to-wl-dispatch all -- 0.0.0.0/0 0.0.0.0/0 /* cali:aFl0WFKRxDqj8oA6 */

Chain cali-to-wl-dispatch (2 references)

target prot opt source destination

cali-tw-calibd2348b4f67 all -- 0.0.0.0/0 0.0.0.0/0 [goto] /* cali:m9Fd7J2kx1zys3Gw */

Chain cali-tw-calibd2348b4f67 (1 references)

target prot opt source destination

MARK all -- 0.0.0.0/0 0.0.0.0/0 /* cali:GqobtmvaGkGX_I6Q */ /* Start of policies */ MARK and 0xfffdffff

cali-pi-_w6c3i7lsXCdtfGqcxq5 all -- 0.0.0.0/0 0.0.0.0/0 /* cali:Ew7qVfwras3_yV_L */ mark match 0x0/0x20000

Chain cali-pi-_w6c3i7lsXCdtfGqcxq5 (1 references)

target prot opt source destination

MARK tcp -- 0.0.0.0/0 0.0.0.0/0 /* cali:O4FzgAjAMQ8CsxAM */ /* Policy default/default.allow-tcp-80 ingress */ match-set cali40s:SPeglQlTBmfidv00S2cBaDC src multiport dports 80 MARK or 0x10000

// Packets that match the policy get mark 0x10000 (accept)
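The MARK operations in these chains are plain bit arithmetic on the packet mark. The Python sketch below replays the three operations that appear in the output above. The constant names are illustrative; only the hexadecimal values come from the rules, and describing 0x20000 as a "pass" bit reflects my understanding of Calico's naming rather than anything shown in the output.

```python
ACCEPT_BIT = 0x10000  # set by the policy rule: MARK or 0x10000
PASS_BIT = 0x20000    # cleared at "Start of policies": MARK and 0xfffdffff


def start_of_policies(mark: int) -> int:
    """MARK and 0xfffdffff: clear bit 0x20000, keep every other bit."""
    return mark & 0xFFFDFFFF


def policy_chain_runs(mark: int) -> bool:
    """mark match 0x0/0x20000: true when bit 0x20000 is not set."""
    return (mark & PASS_BIT) == 0x0


def policy_rule_matched(mark: int) -> int:
    """MARK or 0x10000: set the accept bit."""
    return mark | ACCEPT_BIT


mark = start_of_policies(0x20000)
print(hex(mark), policy_chain_runs(mark))
mark = policy_rule_matched(mark)
print(hex(mark), bool(mark & ACCEPT_BIT))
```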