地方门户网站app济宁医院网站建设

1、k8s出现的背景:

随着服务器上的应用增多,需求的千奇百怪,有的应用不希望被外网访问,有的部署的时候,要求内存要达到多少G,每次都需要登录各个服务器上执行操作更新,不仅容易出错,还很浪费时间。

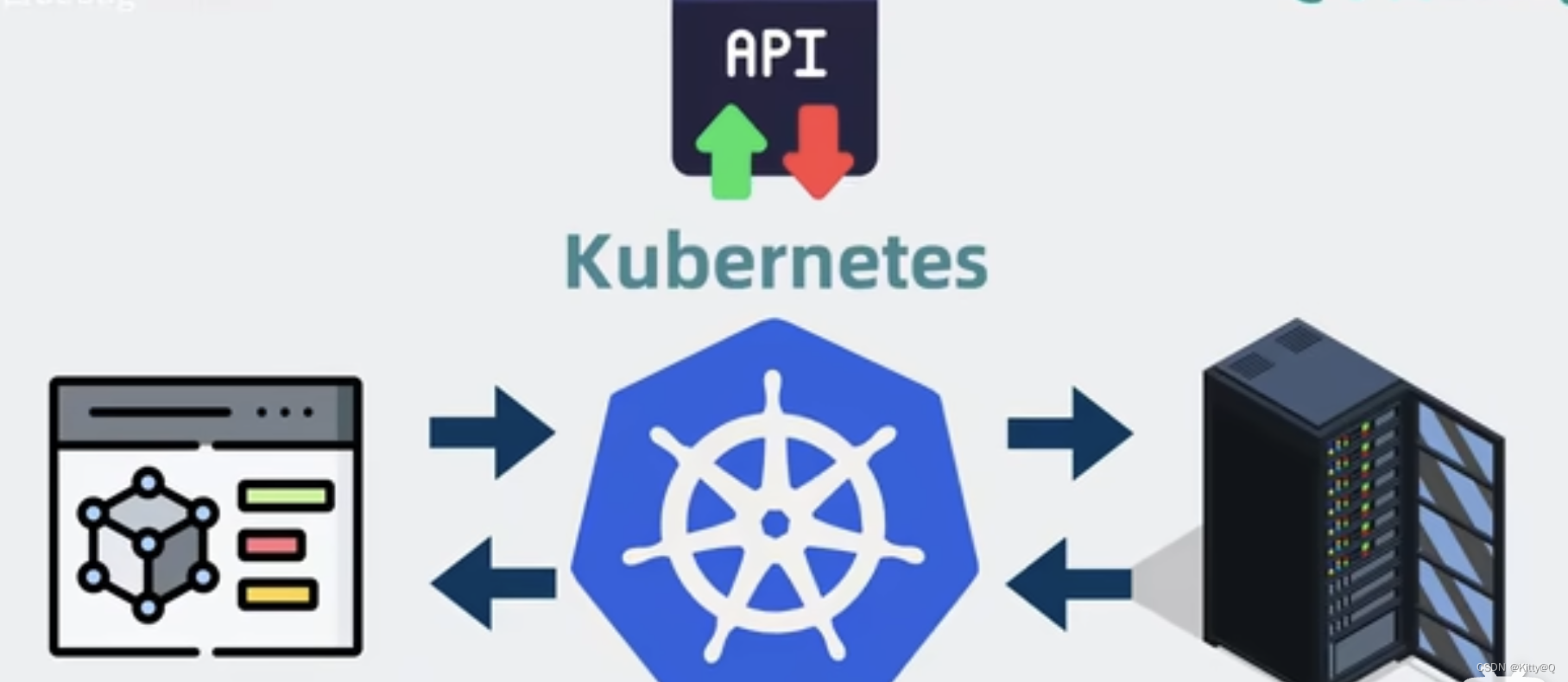

2、k8s是什么?

介于应用服务和服务器之间,能够通过策略协调和管理多个应用服务,只需要一个yaml文件配置,定义应用的部署顺序等信息,就能自动部署应用到各个服务器上,能让它们自动阔缩容,而且做到挂了后在其他服务器上自动部署应用。

本质上是应用服务和服务器之间的中间层,通过暴露一系列API能力,让我们简化服务的部署运维流程。并且中大厂利用这些api能力,搭建自己的服务管理平台,程序员不需要再敲kubectl命令,直接在界面上点点就能完成服务的部署和扩容等操作。

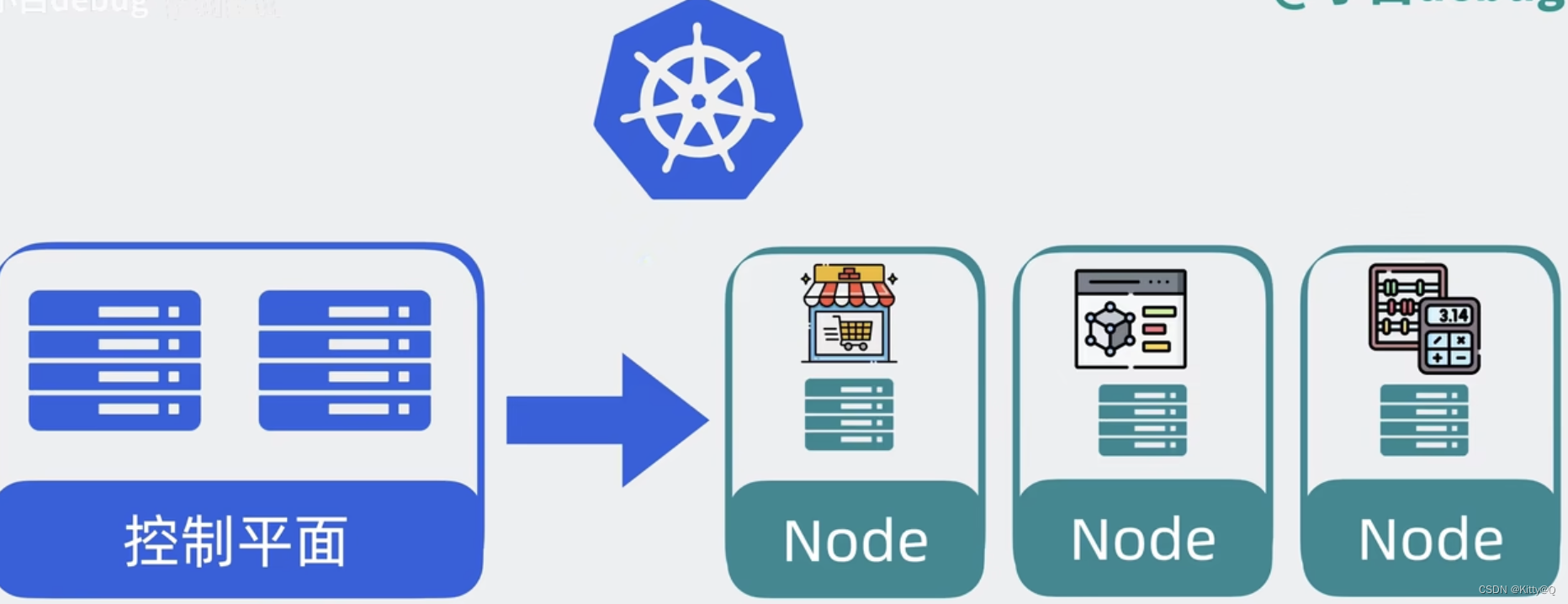

3、k8s架构原理:

k8s分成两部分,一部分叫做控制平面(control plane),另一部分叫做工作节点(Node)。

控制平面负责控制和管理各个node,node负责运行各个应用服务。

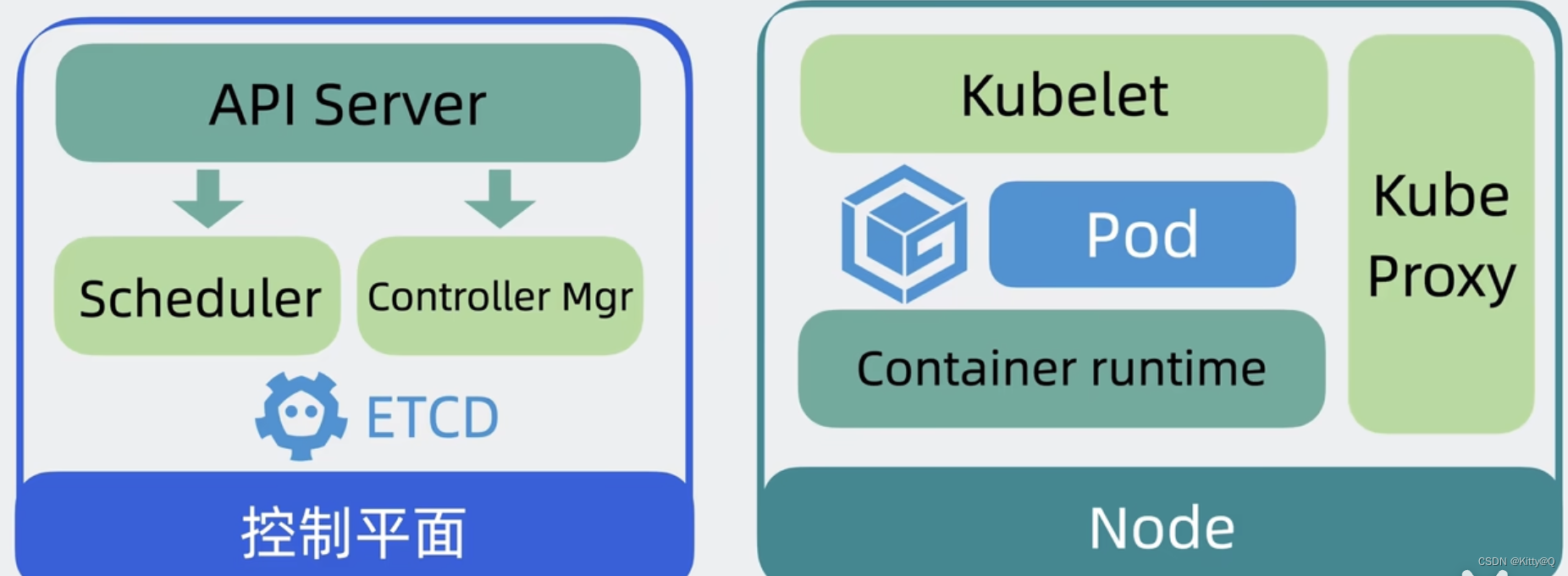

(1)控制平面内部组件

API SERVER作用: 以前我们需要登录到每台服务器上手动执行各种命令,现在我们只需要调用k8s提供的api接口就能操作这些服务资源,这些接口都是由API SERVER组件提供的。

scheduler作用: 以前我们需要到处看哪台服务器CPU和内存资源充足,然后才能部署应用,现在可以通过控制平面中的scheduler调度器来完成。

controller Mgr 作用:以前我们需要手动创建和关闭服务,现在这部分功能由controller Mgr控制器管理器完成。

ETCD:由于API SERVER、scheduler、controller Mgr 会产生一些数据,这些数据需要保存到存储层ETCD中。

(2)NODE内部组件

node是实际的工作节点,它既可以是裸机服务器,也可以是虚拟机,它会负责实际运行各个应用服务。多个应用服务共享一台node上的内存和cpu等计算资源。以前我们需要将代码上传到服务器,如今用了k8s以后,我们只需要将服务代码打包成容器镜像(container image)就能一行命令将它部署。

容器镜像的含义:将应用代码和依赖的系统环境打了个压缩包,在任意一台机器上解压缩,就能正常运行服务。

容器镜像图:

为了下载和部署镜像,node中会有一个容器运行时组件(container runtime),每个服务都可以认为是一个container,并且大多数时候,我们还会给应用服务搭配一个日志收集器container,或监控采集器container,这几个container共同组成一个pod,pod运行在node上,k8s可以将pod从某个node调度到另一个node,还能以pod为单位,去做重启和动态扩缩容的操作,所以说pod时k8s中最小的调度单位。

pod的构成图:

node服务器节点图:

kubelet的作用: 控制平面会通过controller mgr控制node创建和关闭服务,node通过kubelet接受controller mgr的命令并执行。kubelet主要负责管理和监控pod。

kube proxy:负责node中的网络通信功能,外部请求通过它转发到pod中。

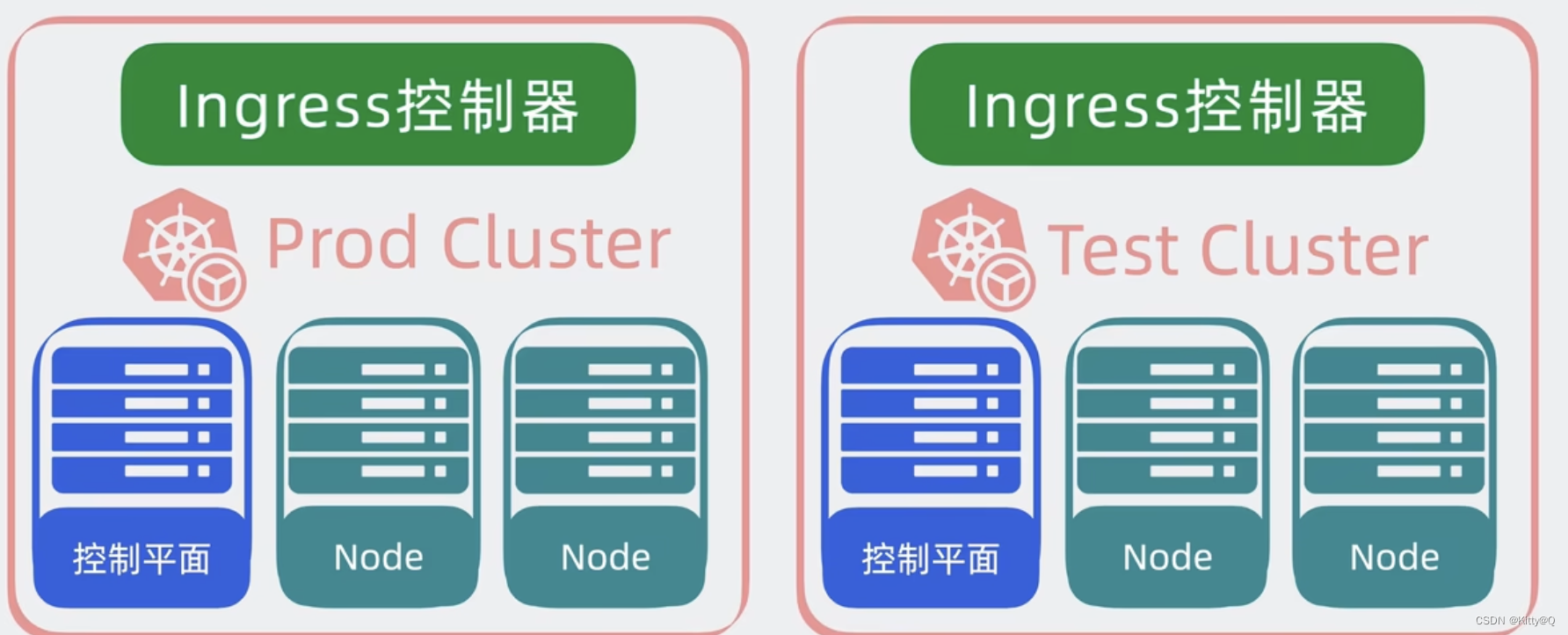

4、cluster是什么?

控制平面和node共同构成了一个cluster(集群),在单位里,我们一般会构建多个集群,如测试环境一个集群,正式环境用另外一个集群,同时为了将集群内部的服务,暴露给外部用户使用,我们一般会部署一个入口控制器,如ingress控制器,它可以提供一个入口,让外部用户访问集群内部服务。

4、kubectrl是什么?

通过kubectrl命令行工具执行命令,其内部就会调用k8s的API。

5、怎么部署服务?

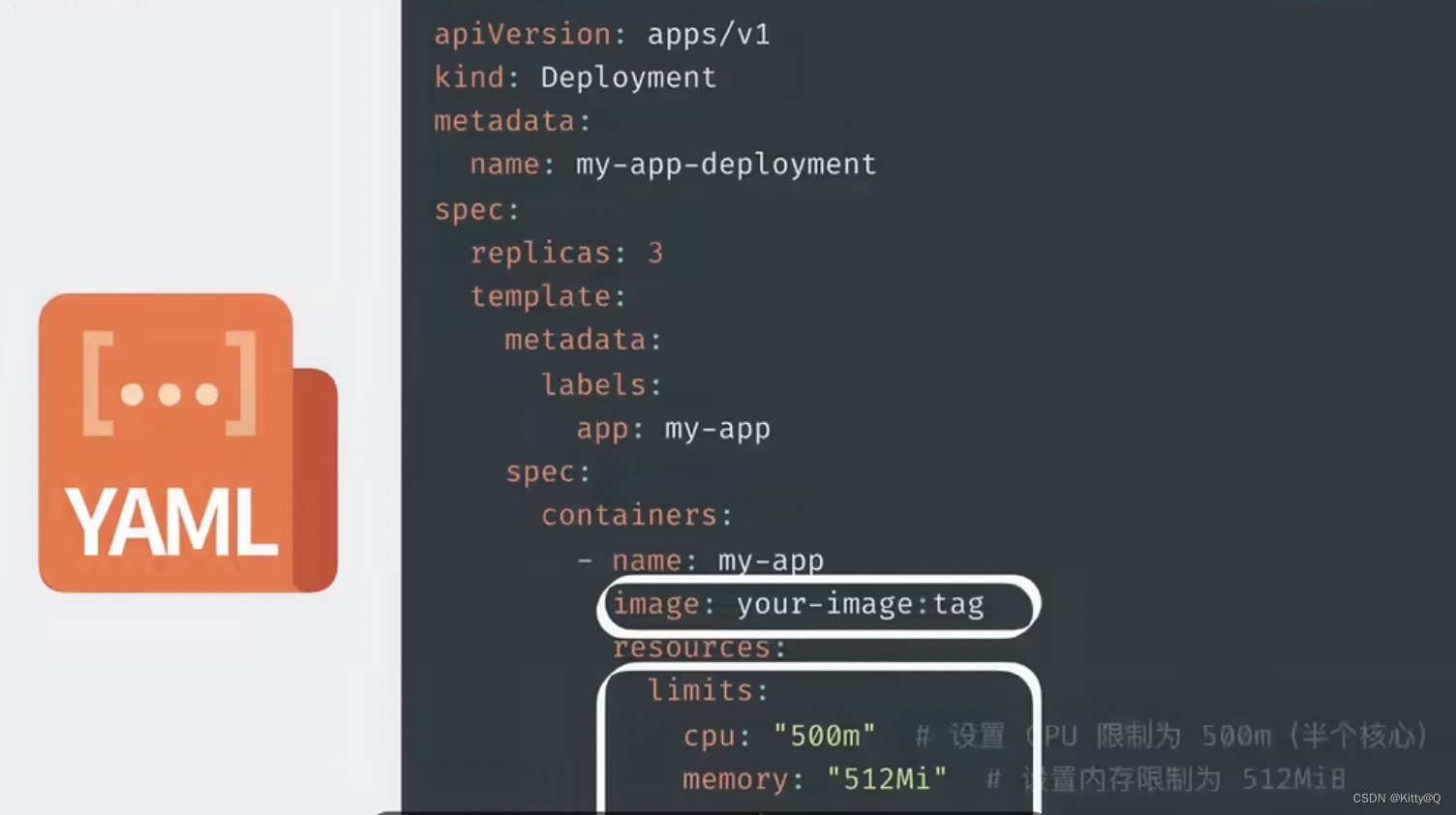

首先先编写yaml文件,在里面定义,在里面定义pod里用到了哪些镜像,占用多少内存和cpu信息,然后使用kubectrl命令行工具执行kubectrl apply yaml文件,此时kubectrl将读取和解析yaml文件,将解析后的对象,通过api请求发送给k8s控制平面的API SERVER,API SERVER会根据要求驱使scheduler通过etcd提供的数据寻找合适的node,再让controller manager控制node创建服务。

node的内部的kubelet收到命令后,会开始基于container runtime组件去拉取镜像,创建容器,最终pod创建。则服务完成创建。整个过程只需要写一次yaml文件和执行一次kubelet命令。

yaml文件内容图:

kubectl调用k8s图:

node的内部的kubelet收到命令后图:

6、部署完服务后,服务是怎么调用的?

在浏览器上发送http请求,到达k8s集群的ingress控制器,然后请求会转发到k8s中某个node的kube proxy上,再找到对应的pod后,然后转发到容器内部的服务中,处理结果原路返回,这就完成了一次服务调用。