
In an earlier post I covered the basics of templates (Object-Oriented Programming (C++): Template Basics). This post moves on to more advanced template topics.

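1. Non-Type Template Parameters

Template parameters come in two kinds: type parameters and non-type parameters.

A type parameter appears in the template parameter list after class or typename, and stands for a type.

A non-type parameter uses a constant as a parameter of a class (or function) template; inside the template, that parameter can be used as a constant.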
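As a minimal sketch of a non-type parameter being used as a constant, the hypothetical function template `ArraySize` below lets the compiler deduce `N` from an array's type. Inside the template `N` is a compile-time constant, so the result can even size another array.

```cpp
#include <cstddef>

// Hypothetical helper. T is a type parameter; N is a non-type parameter
// that the compiler deduces from the array type (int[4] gives N = 4).
template<class T, std::size_t N>
constexpr std::size_t ArraySize(const T (&)[N])
{
    return N;  // N is a compile-time constant inside the template
}

constexpr int kPrimes[] = { 2, 3, 5, 7 };

// The result is a constant expression, so it can size another array.
static_assert(ArraySize(kPrimes) == 4, "N deduced as 4");
int copyOfPrimes[ArraySize(kPrimes)];
```

Since C++17, `std::size` in `<iterator>` provides the same thing for built-in arrays.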

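Non-type template parameters are more flexible than macros: each instantiation can use its own value, and that value has a real type. std::array uses one: std::array<int, 10> is a fixed-size ("static") array whose size is the template argument 10. Compared with a plain array it offers at(), which checks the index and throws std::out_of_range; operator[] is unchecked by the standard, although debug builds of some implementations (such as MSVC's) assert on an out-of-range index. std::array stores its elements inside the object itself, so a local std::array lives on the stack with no heap allocation, which is cheaper than allocating on the heap. On common platforms such as x86 and ARM the stack grows downward, from high addresses to low addresses.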

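For plain arrays, the debug builds of some compilers (for example MSVC with run-time checks enabled) place guard bytes just past the end of a stack array and check them when the function returns; if the guard bytes were changed, an out-of-bounds error is reported. This only catches writes that land on the guard bytes, so an out-of-bounds read usually goes unreported, and a write further away can be missed too. In standard C++, any out-of-bounds access is undefined behavior.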
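The bounds check that the standard actually guarantees for std::array comes from `at()`. A minimal sketch; the helper `AtThrows` is hypothetical:

```cpp
#include <array>
#include <cstddef>
#include <stdexcept>

// Returns true if reading index i through std::array::at() throws.
// at() is required to check the index and throw std::out_of_range;
// operator[] performs no such check in standard C++.
bool AtThrows(const std::array<int, 10>& arr, std::size_t i)
{
    try {
        (void)arr.at(i);
        return false;
    } catch (const std::out_of_range&) {
        return true;
    }
}
```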

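Notes:

1. Before C++20, floating-point numbers, class objects, and strings cannot be used as non-type template parameters. (C++20 allows floating-point types and literal class types; string literals still cannot be passed directly as template arguments.)

2. The argument for a non-type template parameter must be known at compile time.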
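To illustrate note 2, any constant expression can serve as the argument, while an ordinary variable cannot. A minimal sketch; `Buffer` and `Twice` are hypothetical names:

```cpp
#include <cstddef>

// Hypothetical fixed-size buffer whose size is a non-type parameter.
template<std::size_t N>
struct Buffer
{
    char data[N];
};

constexpr std::size_t kSize = 16;                              // constexpr variable
constexpr std::size_t Twice(std::size_t n) { return 2 * n; }  // constexpr function

Buffer<kSize> buf16;           // N = 16
Buffer<Twice(kSize)> buf32;    // N = 32, computed at compile time
Buffer<sizeof(int) * 2> bufInts;  // sizeof is a constant expression too

// std::size_t n = 16;
// Buffer<n> bad;  // error: n is not a constant expression
```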

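Non-type template parameters in brief:

More flexible than macros.

Before C++20, the parameter must be an integral type (char, short, int, bool, size_t, and so on), an enumeration, a pointer or reference to an object with static storage duration or to a function, a pointer to member, or std::nullptr_t; floating-point and class types are rejected.

C++20 additionally allows floating-point types such as double, as well as literal class types.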
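Integral types are the most common choice, but not the only kinds accepted before C++20. A minimal sketch with an enumeration, a pointer, and a reference parameter; `Level`, `Weight`, `Bump`, `Reset`, and `g_counter` are hypothetical:

```cpp
enum class Level { Low, High };

// An enumeration value as a non-type parameter.
template<Level L>
int Weight()
{
    return L == Level::High ? 10 : 1;
}

// A global object has static storage duration, so its address
// (or a reference to it) is a valid template argument.
int g_counter = 0;

template<int* P>
void Bump()
{
    ++*P;
}

template<int& R>
void Reset()
{
    R = 0;
}
```

A call such as `Bump<&g_counter>()` increments the global through the pointer baked into the instantiation.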

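#include <cstddef>

// N is a non-type template parameter: the capacity, fixed at compile time
template<std::size_t N>
class Stack
{
private:
    int _a[N];      // array size comes from the template argument
    int _top = 0;
};

int main()
{
    Stack<5> a1;    // holds 5 ints
    Stack<10> a2;   // holds 10 ints; Stack<5> and Stack<10> are distinct types
    return 0;
}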
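The Stack above only declares storage. A fuller sketch, assuming a hypothetical `FixedStack` that adds an element type and Push/Pop, shows N acting as a capacity that lives inside the object (no heap allocation):

```cpp
#include <cstddef>
#include <stdexcept>

// Hypothetical fixed-capacity stack: T is the element type, N the capacity.
template<class T, std::size_t N>
class FixedStack
{
public:
    void Push(const T& x)
    {
        if (_top == N) throw std::length_error("FixedStack is full");
        _a[_top++] = x;
    }
    void Pop()
    {
        if (_top == 0) throw std::out_of_range("FixedStack is empty");
        --_top;
    }
    const T& Top() const { return _a[_top - 1]; }  // precondition: !Empty()
    bool Empty() const { return _top == 0; }
    std::size_t Size() const { return _top; }
    static constexpr std::size_t Capacity() { return N; }

private:
    T _a[N];              // storage sized by the non-type parameter
    std::size_t _top = 0;
};
```

Throwing on overflow is one design choice; an assert or a bool return would work as well.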

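A standard structure that uses a non-type template parameter:

array: a fixed-size ("static") array.

The standard guarantees bounds checking only through at(); operator[] is checked only in some debug builds.

A local std::array keeps its elements on the stack, which avoids heap allocation.

On common platforms the stack grows downward, from high addresses to low addresses.

#include <array>
#include <iostream>
using namespace std;

int main()
{
    // WARNING: every out-of-bounds access below is undefined behavior;
    // what gets reported depends on the compiler and build mode.

    // Plain array: debug guard bytes after the array catch only some
    // writes (checked at function return); reads usually go unnoticed.
    int a[10] = { 0 };
    cout << a[11] << endl;
    a[11] = 1;

    // std::array: in checked debug builds (e.g. MSVC) operator[] asserts on
    // both the read and the write; b.at(11) would throw std::out_of_range.
    array<int, 10> b;
    cout << b[11] << endl;
    b[11] = 1;
    return 0;
}

2. Template Specialization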

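Templates let us write type-independent code, but for some special types a template can produce wrong results and needs special handling. For example, take a function template that performs a less-than comparison: when called with two Date* pointers, it compares the addresses held in the pointers rather than the dates they point to.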

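Note: in general, when a function template cannot handle a type, or handles it incorrectly, the simplest approach is to write an ordinary (non-template) function for that type directly.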
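A minimal sketch of the problem and of the plain-function fix. The tiny `Date` struct is a hypothetical stand-in for the Date class from the earlier post:

```cpp
// Hypothetical minimal Date with a lexicographic operator<.
struct Date
{
    int year, month, day;
    bool operator<(const Date& d) const
    {
        if (year != d.year) return year < d.year;
        if (month != d.month) return month < d.month;
        return day < d.day;
    }
};

template<class T>
bool LessFun(const T& left, const T& right)
{
    return left < right;  // with T = Date*, this compares addresses
}

// The simple fix: an ordinary function for Date*. For Date* arguments it
// is an exact match and is preferred over the template.
bool LessFun(Date* const& left, Date* const& right)
{
    return *left < *right;  // compare the dates themselves
}
```

With `Date arr[2] = { {2024, 1, 2}, {2024, 1, 1} };`, the explicit call `LessFun<Date*>(&arr[0], &arr[1])` forces the template and returns true only because `arr[0]` sits at the lower address, while `LessFun(&arr[0], &arr[1])` picks the plain function and returns false.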

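Steps for specializing a function template:

1. There must first be a base (primary) function template.

2. The keyword template is followed by an empty pair of angle brackets <>.

3. The function name is followed by angle brackets that name the type being specialized.

4. Parameter list: it must match the base template's parameter types exactly, with the template parameter replaced by the specialized type; otherwise the compiler may report confusing errors.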
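The four steps above, applied to C strings as a minimal sketch (this `const char*` specialization is an illustration, not part of the original example):

```cpp
#include <cstring>

// Step 1: the base template.
template<class T>
bool LessFun(const T& left, const T& right)
{
    return left < right;
}

// Steps 2-4: template<>, the specialized type after the function name, and
// the base's parameter list with T = const char*, i.e. const char* const&.
template<>
bool LessFun<const char*>(const char* const& left, const char* const& right)
{
    return std::strcmp(left, right) < 0;  // compare contents, not addresses
}
```

The specialization is chosen when both arguments have type `const char*`. Passing two string literals of different lengths directly, such as `LessFun("apple", "banana")`, does not compile, because T would be deduced as two different array types.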

Base template:

template<class T>
bool LessFun(const T& left, const T& right)
{
    return left < right;
}

Specialization:

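Note that in the base template's const T&, the const applies to T itself. With T = Date*, the parameter type becomes Date* const& (a reference to a const pointer), so in the specialization the const must go to the right of the *. Writing const Date*& would instead mean a reference to a pointer to const Date, which does not match the base template.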
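The compiler can confirm this rule. A minimal sketch using `std::is_same`; the empty `Date` and the alias `Param` are hypothetical stand-ins:

```cpp
#include <type_traits>

struct Date {};  // hypothetical stand-in; only the type matters here

// The base template's parameter type, written as an alias.
template<class T>
using Param = const T&;

// const applies to T itself: with T = Date*, the pointer is const.
static_assert(std::is_same<Param<Date*>, Date* const&>::value,
              "const T& with T = Date* is Date* const&");

// It is not a reference to a pointer to const Date.
static_assert(!std::is_same<Param<Date*>, const Date*&>::value,
              "const Date*& is a different type");
```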

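template<>
bool LessFun<Date*>(Date* const& left, Date* const& right)
{
    return *left < *right;   // compare the Dates, not the addresses
}

// Recommended: an ordinary function. For Date* arguments it is an exact
// match and is preferred over the template.
bool LessFun(Date* const& left, Date* const& right)
{
    return *left < *right;
}

2.1 Full Specialization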

Full specialization fixes every parameter in the template parameter list.

Class template specialization

1. Full specialization:

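#include <iostream>
using namespace std;

template<class T1, class T2>
class Data
{
public:
    Data() { cout << "Data<T1,T2>" << endl; }
private:
    T1 _d1;
    T2 _d2;
};

// Full specialization: all parameters are fixed, so template<> is empty
template<>
class Data<int, char>
{
public:
    Data() { cout << "Data<int,char>" << endl; }
};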

2.2 Partial Specialization

Partial specialization: any specialized version that places further conditions on the template parameters.

// 2. Partial specialization
template<class T1>
class Data<T1, double>
{
public:
    Data() { cout << "Data<T1,double>" << endl; }
};

// Partial specialization on a kind of type:
// both arguments are pointers
template<class T1, class T2>
class Data<T1*, T2*>
{
public:
    Data() { cout << "Data<T1*,T2*>" << endl; }
};

// both arguments are references
template<class T1, class T2>
class Data<T1&, T2&>
{
public:
    Data() { cout << "Data<T1&,T2&>" << endl; }
};
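A pattern such as `T*` is also the usual way to write a type trait. A minimal sketch of a hypothetical `IsPointer`; standard traits like `std::is_pointer` are commonly built along similar lines, though real implementations also handle cv-qualifiers:

```cpp
// Primary template: by default, a type is not a pointer.
template<class T>
struct IsPointer
{
    static constexpr bool value = false;
};

// Partial specialization: matches any T* and answers "yes".
template<class T>
struct IsPointer<T*>
{
    static constexpr bool value = true;
};
```

Unlike `std::is_pointer`, this sketch does not strip top-level cv-qualifiers, so `IsPointer<int* const>::value` is false.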

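2.2.1 Specializing Some of the Parameters

Specialize only some of the parameters in the template parameter list.

// Specialize the second parameter as int
template <class T1>
class Data<T1, int>
{
public:
    Data() { cout << "Data<T1, int>" << endl; }
private:
    T1 _d1;
    int _d2;
};

2.2.2 Further Restricting the Parameters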

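Partial specialization does not only mean specializing some of the parameters; it covers any specialized version designed by placing further conditions on the template parameters.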
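One detail worth checking: in a pattern such as `Data<T1*, T2*>`, the template parameters are bound to the pointed-to types, so `Data<int*, int*>` gives `T1 = int`. A minimal sketch with a hypothetical `RemovePointer` trait makes this visible:

```cpp
#include <type_traits>

// Primary template: non-pointer types are left unchanged.
template<class T>
struct RemovePointer { using type = T; };

// For an argument U*, the pattern T* binds T to the pointee U.
template<class T>
struct RemovePointer<T*> { using type = T; };

static_assert(std::is_same<RemovePointer<int*>::type, int>::value,
              "T binds to the pointee");
```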

//两个参数偏特化为指针类型 template <typename T1, typename T2> class Data <T1*, T2*>

{ public:Data() {cout<<"Data<T1*, T2*>" <<endl;}private:T1 _d1;T2 _d2;

};//两个参数偏特化为引用类型template <typename T1, typename T2>class Data <T1&, T2&>{public:Data(const T1& d1, const T2& d2): _d1(d1), _d2(d2){cout<<"Data<T1&, T2&>" <<endl;}private:const T1 & _d1;const T2 & _d2; };void test2 ()

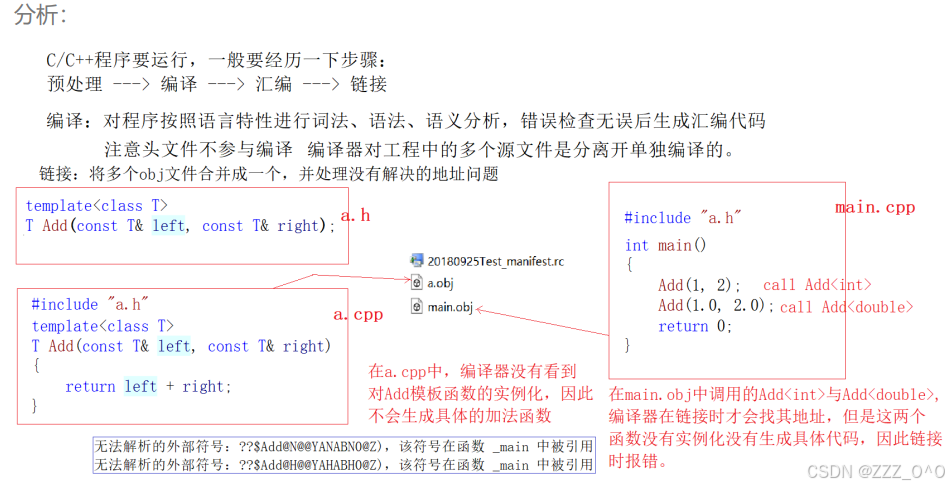

{Data<double , int> d1; // 调用特化的int版本Data<int , double> d2; // 调用基础的模板 Data<int *, int*> d3; // 调用特化的指针版本Data<int&, int&> d4(1, 2); // 调用特化的指针版本}3.模版的分离编译

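In general, do not separate a template's declaration (in a .h file) from its definition (in a .cpp file). A template is only instantiated where it is used with concrete arguments: the .cpp file holding the definition never sees those arguments, so it generates no code, while the files that call the template see only the declaration, and the build fails at link time with an undefined reference. If you do separate them, there are two workarounds: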

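1. Put the declaration and the definition in the same file, "xxx.hpp" (a plain xxx.h works too). This is the recommended approach.

2. Explicitly instantiate the template where it is defined. This is impractical, because every type that will be used must be listed in advance, and is not recommended.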
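Method 2 can be sketched in one file, with comments marking what would live in the header and in the .cpp file. The function `Add` and the file names are hypothetical:

```cpp
// --- would be Add.h: declaration only ---
template<class T>
T Add(const T& left, const T& right);

// --- would be Add.cpp: definition plus explicit instantiations ---
template<class T>
T Add(const T& left, const T& right)
{
    return left + right;
}

// Explicit instantiation definitions: force the compiler to emit
// Add<int> and Add<double> here, so other .cpp files that only see
// the declaration can link against them.
template int Add<int>(const int&, const int&);
template double Add<double>(const double&, const double&);
```

With this setup, a caller in another file that uses `Add<long>` would still fail to link, since only int and double were instantiated; that maintenance burden is why method 1 is preferred.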